The past 3-5yrs have seen a rapid adoption of data-driven decision making in the enterprise at unprecedented operational levels of the business. What used to be merely business intelligence for strategic decision making (e.g. formulating enterprise-wide annual operating and cost plan) has now grown to become data consumption-based business operations (e.g. negotiating with a vendor on a purchase order). This is evidenced by the leading cloud data warehouses (like Databricks and Snowflake) choosing a consumption-based pricing model over a data volume or number of users-based model. This shows that the frequency and the operational depth in the utilization of data (through compute) are the best indicators of value-created in an enterprise.

A quick browse-through of their websites highlights both the key industry verticals and business functions that are rapidly turning data-driven. Leading verticals include financial services, health & life sciences, media & communications, e-retail & CPG and manufacturing. Business functions across sales & marketing, supply-chain & procurement, finance & risk and R&D are embracing data-consumption driven operations.

Data consumption is driving business operations away from traditional and static rules-based workflows, where human operators manually wrangled with limited data and drove the workflows towards decisions. In today’s world, business growth no longer needs cost-prohibitive workflow implementations or large teams of human operators.

The efficiency of data consumption has displaced workflow upkeep and large human operator teams to become the key driver of business growth. This has become quite apparent in the enterprise hiring/staffing trends despite growth in the businesses. This data consumption efficiency builds a consistent competitive advantage across thousands of operational decisions taken in the enterprise every day. Several aspects contribution to building data consumption efficiency of an enterprise – here are some important ones:

Cloud data warehouses are leading the paradigm shift in data infrastructure needed for scaling-up data consumption for business operations. They are reorganizing the enterprise’s cloud compute infrastructure, and driving the shift from rules-based vanilla workflows to data consumption-based business operations. This makes them radically different from traditional data warehouses of the yester years – in fact, it is misleading to even use the same phrase ‘data warehouse’ to describe cloud data warehouses; and hence the industry has coined new words like ‘lakehouse’. The crux of the paradigm shift is the innocuous-sounding change from the traditional extract-transform-load (ETL) to the new extract-load-transform (ELT) approach.

This paradigm shift is delivered by modern cloud data warehouses by making the following three foundational shifts in their architecture:

| Shift from the ETL world | to the ELT world |

| Single Sandwiched “Transform” in ETL Rigid, single, enterprise-wide transformation of data to serve all decision-making needs in one go before loading it in the traditional ETL approach. | Multiple Business-specific ELT “Transform”s Multiple, agile, business-specific transformations of pre-loaded data in the ELT approach drives the scaling-up of compute infrastructure for consumption. |

| Rigid “Load” with Meta Data Schemas Rigid meta data management schemas of traditional warehouses attempted to crunch all business decision needs. | Reliable “Load” with Data Catalogs Pre-loading of data into the cloud data warehouse focuses more on data quality and reliability within the constructs of data catalogs (e.g. Unity from Databricks and Polaris from Snowflake) offering immense flexibility to manage structured, semi-structured and unstructured data. |

| “Extraction” for Transformation The rigid transformation process drove brittle “data pipes” connecting data sources (e.g. ERP systems like SAP) to traditional warehouses. | “Extraction” for Efficient Sharing with Data Catalog The data quality and reliability requirements of catalogs can be brought to the data sources creating efficient and reliable data sharing directly from the sources (e.g. Delta sharing from SAP to Databricks). |

Data engineering defines, develops and deploys the transformation of data; and has traditionally been an organization within corporate IT tasked with the maintenance of the ETL data warehouse. However, with data consumption driven business operations, the single enterprise-wide data transformation is replaced by multiple business-specific transformations. Hence, data engineering is expanding from being a mere IT function to becoming an important part of business operations. The traditional shared data engineering within IT is now tasked with managing the shared data sources (the new “extract”) and the data catalogs (the new “load”).

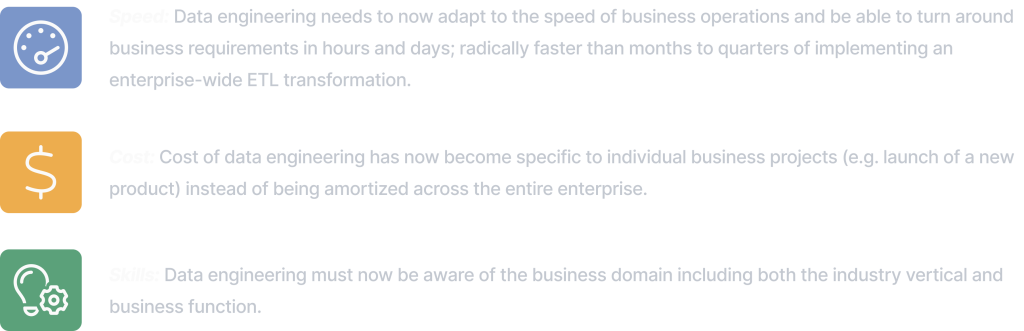

The multitude of business-specific data transformations needs a new business-driven data engineering function with distinct features to empower data consumption at scale:

Both enterprise customers and vendors of the modern cloud data warehouses are grappling with the delivery of business-driven data engineering. Shared data engineering teams in IT organizations are stretched thin and struggle to meet the pressing data consumption demands from the business. There is a whole mushrooming cottage industry of mid-sized system integrators, who have emerged as stop-gap intermediary service providers between the business and IT organizations within enterprises. The challenges in scaling-up business-driven data engineering are constraining the utilization of modern cloud data warehouses and denting the data consumption efficiency of the business. This has opened the demand and a new market opportunity for on-demand business-driven data engineering.

In the next blog, we introduce Purgo AI – the industry’s first on-demand data engineering product for the business user – that address this demand and fulfills the vision of data consumption driven business operations.